Have you ever heard of the snap pea? It is a vegetable that tastes sweet and delicious when briefly boiled. However, snap peas have one big problem. Take a look at the picture below.

At first glance, it is hard to tell where the snap peas are. When I helped my father with his farm work, I had difficulty harvesting snap peas. I ended up walking back and forth across the field many times.

Could I somehow make the snap pea harvest easier? Maybe detecting snap peas with YOLOv5 object detection could help!

YOLOv5 is an object detection algorithm developed by Ultralytics.

YOLOv5 is easy to train even for casual users, and it includes various techniques for achieving good accuracy. In this article, I use YOLOv5 to build an object detector for snap peas. I built a snap pea detector in a previous article, but this time I try to create a more accurate model by tuning its hyperparameters with a genetic algorithm.

The following walks through the whole workflow for detecting snap peas with YOLOv5. For implementation details, please refer to my previous articles or the YOLOv5 documentation.

Setup

- Install

```bash
git clone https://github.com/ultralytics/yolov5  # clone
cd yolov5
pip install -r requirements.txt  # install
```

- Trial inference

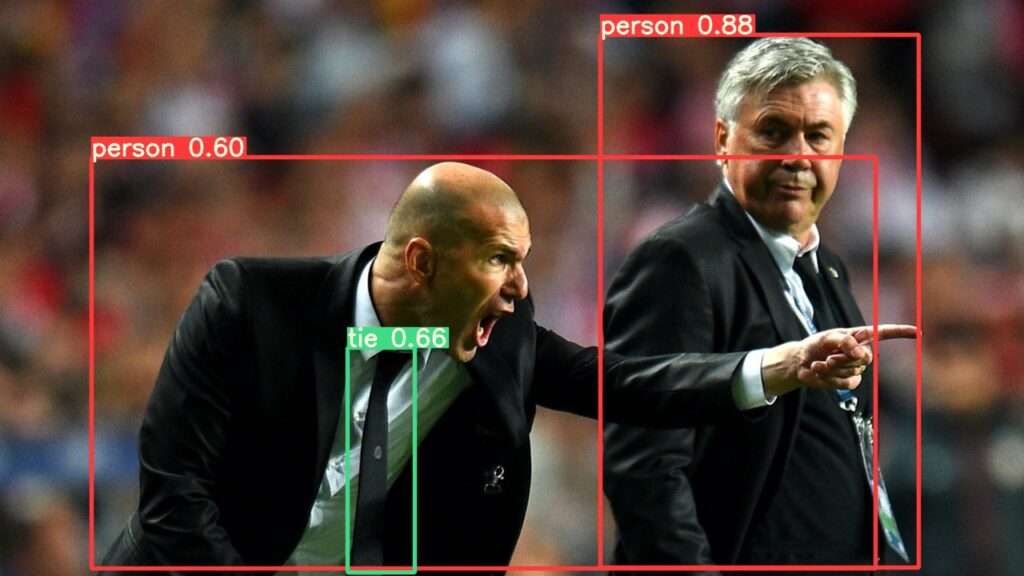

```bash
python detect.py --source ./data/images/ --weights yolov5s.pt --conf 0.4
```

The resulting object detection images are saved in the “runs/detect/exp” directory.

If it succeeds, you will find the detection result for the Zidane sample image there.🚀🚀🚀

Dataset Preparation

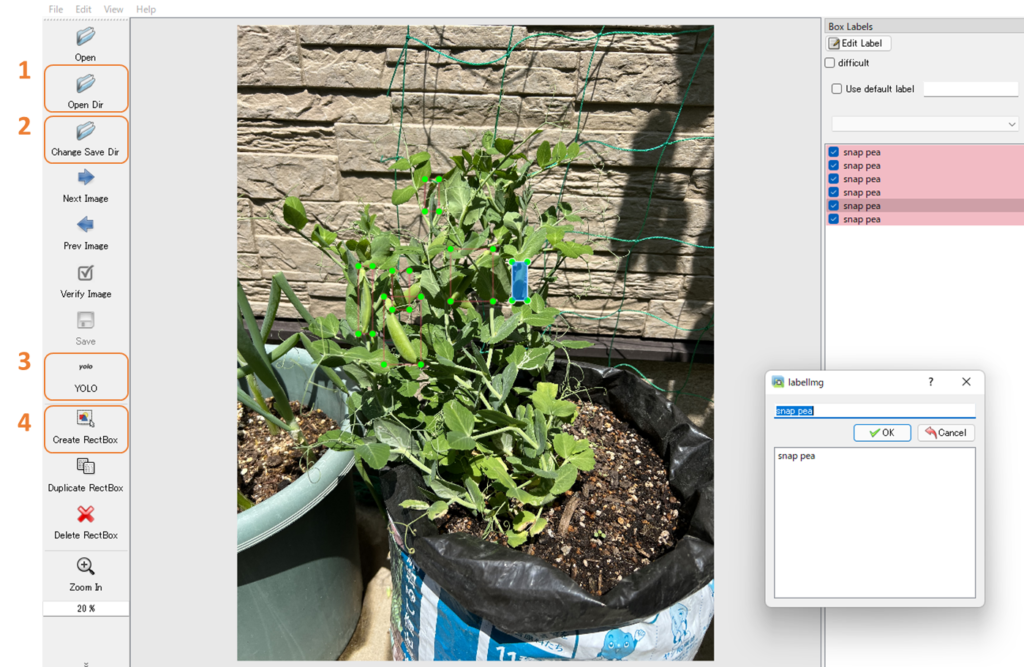

- Annotation

Training YOLOv5 requires collecting many snap pea images and annotating the snap peas in them. I recommend using “labelImg” for annotation.

- Open the directory where the images are stored.

- Specify the directory to save annotation text files.

- Specify YOLO as the save format.

- Annotate every snap pea, then save.
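In YOLO format, each image gets one text file with one line per box: the class index, then the box center x, center y, width and height, each normalized by the image width or height. The sketch below illustrates that conversion for a box given as pixel corner coordinates; `to_yolo_line` is a hypothetical helper for illustration, not part of labelImg.

```python
def to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space corner box into a YOLO-format label line.

    Hypothetical helper: YOLO labels store the class index, then the box
    center (x, y), width and height, all normalized to the 0-1 range.
    """
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# A snap pea box from (100, 200) to (300, 600) in a 1000x800 image
print(to_yolo_line(0, 100, 200, 300, 600, 1000, 800))
# → 0 0.200000 0.500000 0.200000 0.500000
```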

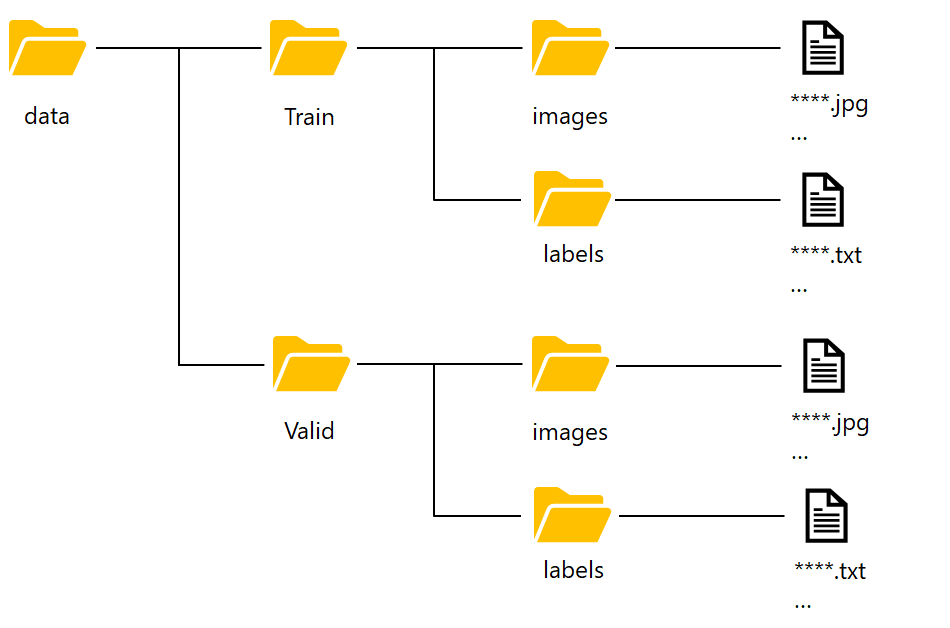

- Dataset Placement

Save the images and annotation (label) files under the directory structure shown below, with the dataset split into training data and validation data.
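One way to do the split is a small script like the following. It is a minimal sketch assuming .jpg/.jpeg/.png images and labelImg text files that share each image's base name; the function names, the 80/20 default and the seed are illustrative choices, not part of YOLOv5. The images/labels pairing matters because YOLOv5 finds each label file by swapping the `images` directory in the image path for `labels`.

```python
import random
import shutil
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def assign_splits(image_names, valid_ratio=0.2, seed=0):
    """Shuffle image names reproducibly and split them into valid/train lists."""
    names = sorted(image_names)
    random.Random(seed).shuffle(names)  # fixed seed -> same split every run
    n_valid = int(len(names) * valid_ratio)
    return {"valid": names[:n_valid], "train": names[n_valid:]}


def split_dataset(image_dir, label_dir, out_dir, valid_ratio=0.2, seed=0):
    """Copy image/label pairs into out_dir/{train,valid}/{images,labels} (hypothetical helper)."""
    image_dir, label_dir, out_dir = Path(image_dir), Path(label_dir), Path(out_dir)
    names = [p.name for p in image_dir.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES]
    splits = assign_splits(names, valid_ratio, seed)
    for split, split_names in splits.items():
        img_out = out_dir / split / "images"
        lbl_out = out_dir / split / "labels"
        img_out.mkdir(parents=True, exist_ok=True)
        lbl_out.mkdir(parents=True, exist_ok=True)
        for name in split_names:
            shutil.copy2(image_dir / name, img_out / name)
            label = label_dir / (Path(name).stem + ".txt")
            if label.exists():  # an image with no snap peas may have no label file
                shutil.copy2(label, lbl_out / label.name)
    return {split: len(v) for split, v in splits.items()}


# Usage (hypothetical paths):
# split_dataset("raw/images", "raw/labels", "data")
```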

- Creating the “data.yaml” file

This file sets the dataset paths and classes. Create “data.yaml” with the following content:

```yaml
train: data/train/images
val: data/valid/images
nc: 1
names: ['snap pea']
```
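Before training, it can be worth checking that every label file agrees with `nc` in “data.yaml”. The sketch below is an illustrative checker (not part of YOLOv5) that assumes each label line holds five fields: an integer class index below `nc`, followed by four box values normalized to the 0-1 range.

```python
def check_label_lines(lines, nc=1):
    """Return (line_number, problem) tuples for YOLO-format label lines.

    Illustrative checker: expects "class x_center y_center width height",
    with class in [0, nc) and the four box values in [0, 1].
    """
    problems = []
    for i, line in enumerate(lines, start=1):
        fields = line.split()
        if not fields:
            continue  # ignore blank lines
        if len(fields) != 5:
            problems.append((i, f"expected 5 fields, got {len(fields)}"))
            continue
        try:
            cls = int(fields[0])
            box = [float(v) for v in fields[1:]]
        except ValueError:
            problems.append((i, "non-numeric value"))
            continue
        if not 0 <= cls < nc:
            problems.append((i, f"class {cls} outside 0..{nc - 1}"))
        if any(not 0.0 <= v <= 1.0 for v in box):
            problems.append((i, "box values must be normalized to 0-1"))
    return problems


# Usage with one label file (hypothetical path):
# with open("data/train/labels/pea_001.txt") as f:
#     print(check_label_lines(f, nc=1))
```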

Training with YOLOv5

YOLOv5 lets users easily specify the image size, batch size, pretrained weights, and so on. Data augmentation is also configured automatically, so even casual users can train a model without much effort.
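As an example of what an automatic augmentation does to the labels, consider a horizontal flip (its probability is the `fliplr` value in YOLOv5's hyperparameter files). Mirroring an image left to right only changes each box's normalized x center, from `x` to `1 - x`; y, width and height stay the same. This is a minimal sketch of that idea on YOLO-format boxes, using a list of pixel rows as a toy image; it is not YOLOv5's own augmentation code.

```python
import random


def hflip_boxes(boxes):
    """Mirror YOLO-format boxes (class, x_center, y_center, w, h) left to right.

    Only the normalized x center changes: x -> 1 - x.
    """
    return [(cls, 1.0 - x, y, w, h) for cls, x, y, w, h in boxes]


def maybe_hflip(image_rows, boxes, p=0.5, rng=random):
    """Flip a toy image (list of pixel rows) and its boxes with probability p."""
    if rng.random() < p:
        flipped_image = [row[::-1] for row in image_rows]
        return flipped_image, hflip_boxes(boxes)
    return image_rows, boxes


# A box on the left quarter of the image moves to the right quarter
print(hflip_boxes([(0, 0.25, 0.5, 0.1, 0.2)]))
# → [(0, 0.75, 0.5, 0.1, 0.2)]
```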

You can choose pretrained weights from the following options, which are downloaded automatically from the latest YOLOv5 release.

Here, I used the YOLOv5l pretrained weights and a batch size of 6.

```bash
python train.py --data data.yaml --cfg yolov5l.yaml --weights yolov5l.pt --batch-size 6 --epochs 1000
```

Training results are output to the “runs/train/exp” directory.🖥️

Hyperparameter Evolution

YOLOv5 can tune its hyperparameters by “evolving” them with a genetic algorithm. Let's use this feature.

The default hyperparameters for YOLOv5 are stored in “data/hyps/hyp.scratch-low.yaml”. Its contents show the initial values of parameters such as the learning rate (lr0) and the data augmentation settings. The goal here is to optimize these values for the snap pea model.
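To give a feel for what the genetic algorithm does with these values, here is a simplified sketch of one mutation step, loosely modelled on my reading of YOLOv5's train.py: each hyperparameter is multiplied by a random factor near 1 (mutation probability 0.8, sigma 0.2, factor clipped to 0.3-3.0), and the result is clipped to an allowed range. It omits YOLOv5's per-parameter mutation gains, and the starting values and limits below are illustrative assumptions rather than a copy of “hyp.scratch-low.yaml”.

```python
import random

# Illustrative starting values and (min, max) limits; assumptions, not copied from YOLOv5.
HYP = {"lr0": 0.01, "momentum": 0.937, "weight_decay": 0.0005, "hsv_s": 0.7, "fliplr": 0.5}
LIMITS = {
    "lr0": (1e-5, 1e-1),
    "momentum": (0.6, 0.98),
    "weight_decay": (0.0, 0.001),
    "hsv_s": (0.0, 0.9),
    "fliplr": (0.0, 1.0),
}


def mutate(parent, limits, rng, mp=0.8, sigma=0.2):
    """Return a mutated copy of `parent` (one simplified genetic-algorithm step).

    Each value is multiplied by a random factor near 1, then clipped to its limits.
    """
    if not (0.0 < mp <= 1.0 and sigma > 0.0):
        raise ValueError("need 0 < mp <= 1 and sigma > 0, otherwise nothing can mutate")
    keys = list(parent)
    factors = [1.0] * len(keys)
    while all(f == 1.0 for f in factors):  # retry until at least one value changes
        strength = rng.random()  # one random strength shared by this mutation
        factors = []
        for _ in keys:
            f = 1.0 + rng.gauss(0.0, 1.0) * strength * sigma if rng.random() < mp else 1.0
            factors.append(min(max(f, 0.3), 3.0))  # keep each multiplier in [0.3, 3.0]
    child = {}
    for k, f in zip(keys, factors):
        lo, hi = limits[k]
        child[k] = round(min(max(parent[k] * f, lo), hi), 5)  # clip to the allowed range
    return child


# One mutation from the illustrative starting point, with a seeded RNG
print(mutate(HYP, LIMITS, random.Random(0)))
```

Each generation trains a model with the mutated values, and the best-scoring results feed the next mutation.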

YOLOv5 is designed so that hyperparameter evolution can be run with a single command, shown below. Excellent!👍

```bash
python train.py --data data.yaml \
  --weights ./runs/train/exp/weights/last.pt \
  --epochs 10 \
  --cache \
  --evolve
```

Note that evolution is very time-consuming: at least 300 generations are recommended for the best results, and each generation is a separate 10-epoch training run. In this case, I ran 640 generations, which amounts to 6,400 epochs of training in total.

The hyperparameter evolution results are output to the “runs/train/evolve” directory.🖥️
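Each generation is ranked by a single fitness value. In YOLOv5 this is a weighted sum of the validation metrics, 0.1 × mAP@0.5 + 0.9 × mAP@0.5:0.95 (precision and recall get zero weight), and the next parent is drawn from the best results so far, weighted by fitness. The sketch below reproduces that fitness formula and a simplified parent selection; the function names and the (hyperparameters, metrics) record layout are hypothetical.

```python
import random


def fitness(precision, recall, map50, map50_95):
    """Weighted fitness: 0.1 * mAP@0.5 + 0.9 * mAP@0.5:0.95 (P and R weighted 0)."""
    return 0.0 * precision + 0.0 * recall + 0.1 * map50 + 0.9 * map50_95


def select_parent(history, rng, top_n=5):
    """Pick a parent from the top_n results, favouring higher fitness.

    `history` is a non-empty list of (hyp_dict, metrics) pairs, where
    metrics = (precision, recall, mAP@0.5, mAP@0.5:0.95).
    """
    if not history:
        raise ValueError("history must contain at least one result")
    ranked = sorted(history, key=lambda item: fitness(*item[1]), reverse=True)[:top_n]
    scores = [fitness(*metrics) for _, metrics in ranked]
    weights = [s - min(scores) + 1e-6 for s in scores]  # weakest of the top_n keeps a tiny chance
    hyp, _ = rng.choices(ranked, weights=weights, k=1)[0]
    return hyp


history = [({"lr0": 0.01}, (0.9, 0.8, 0.85, 0.5)), ({"lr0": 0.02}, (0.5, 0.5, 0.3, 0.1))]
print(select_parent(history, random.Random(0)))
```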

Now train with the evolved hyperparameters!!👍

```bash
python train.py --data data.yaml \
  --cfg yolov5l.yaml \
  --weights ./runs/train/exp/weights/last.pt \
  --batch-size 6 \
  --epochs 100 \
  --hyp ./runs/train/evolve/hyp_evolved.yaml
```

Training results are output to the “runs/train/exp2” directory.🖥️

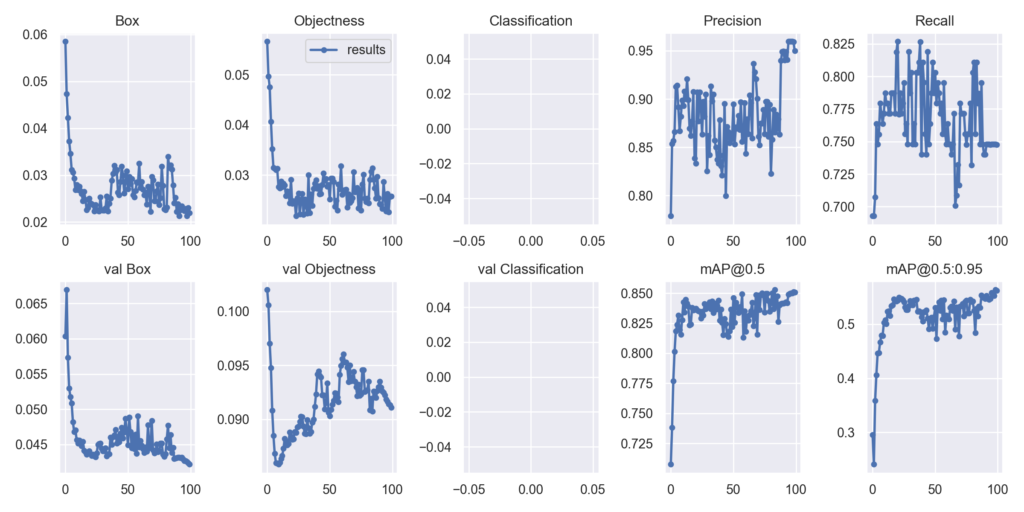

Visualization

YOLOv5 also makes it easy to visualize the results. Check the results.png file in the “runs/train/exp2” directory.
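Besides results.png, recent YOLOv5 versions also write the per-epoch metrics to a results.csv file in the same directory, with column headers padded by spaces. The sketch below assumes a `metrics/mAP_0.5` column and an `epoch` column; please check the header of your own file, since these names are an assumption about the version in use.

```python
import csv
import io


def best_epoch(csv_text, metric="metrics/mAP_0.5"):
    """Return (epoch, value) for the epoch with the highest `metric`.

    Assumes YOLOv5-style results.csv columns; header names are stripped
    because YOLOv5 pads them with spaces.
    """
    reader = csv.reader(io.StringIO(csv_text))
    header = [h.strip() for h in next(reader)]
    col, ep = header.index(metric), header.index("epoch")
    best = None
    for row in reader:
        if not row:
            continue  # skip empty lines
        value = float(row[col])
        if best is None or value > best[1]:
            best = (int(float(row[ep])), value)
    return best


# Usage with this article's run directory:
# with open("runs/train/exp2/results.csv") as f:
#     print(best_epoch(f.read()))
```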

I obtained an mAP@0.5 of 0.85, which is a good level of accuracy!👍
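The “@0.5” in mAP@0.5 is an IoU (intersection over union) threshold: a predicted box counts as a correct detection only if it overlaps a ground-truth snap pea box with an IoU of at least 0.5. A minimal IoU function for YOLO-style (x_center, y_center, width, height) boxes could look like this; the helper names are illustrative.

```python
def iou_xywh(a, b):
    """IoU of two boxes given as (x_center, y_center, width, height)."""
    ax1, ay1, ax2, ay2 = a[0] - a[2] / 2, a[1] - a[3] / 2, a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1, bx2, by2 = b[0] - b[2] / 2, b[1] - b[3] / 2, b[0] + b[2] / 2, b[1] + b[3] / 2
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))  # overlap width (0 if disjoint)
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))  # overlap height (0 if disjoint)
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred, truth, threshold=0.5):
    """Under mAP@0.5, a prediction matches a ground-truth box when IoU >= 0.5."""
    return iou_xywh(pred, truth) >= threshold


# Two equal boxes shifted by half their width overlap with IoU = 1/3
print(iou_xywh((0.5, 0.5, 0.2, 0.2), (0.6, 0.5, 0.2, 0.2)))
```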

Inference

Inference is performed with the model trained using the evolved hyperparameters.

Supported inputs include images, videos, YouTube, and more. I stored photos and videos taken with my iPhone in the “data/inference” directory and ran inference on them.

```bash
python detect.py --source ./data/inference/ \
  --weights ./runs/train/exp2/weights/last.pt \
  --conf 0.3 \
  --line-thickness 6
```

The snap peas were detected successfully, although there was a false positive. If I can put this to good use, it should make harvesting easier.👍

Comparing inference results before and after hyperparameter evolution

Detection appears to have improved considerably. Hyperparameter evolution takes time, but it proved very effective.🚀🚀🚀

Conclusion

By following the flow of YOLOv5 object detection training → hyperparameter evolution → retraining with the evolved parameters, I created a highly accurate model.

YOLOv5 is designed to be easy to use even for casual users. It also comes with built-in mechanisms for improving detection accuracy, so a highly accurate model can be created even when the number of samples is limited. (Of course, the more samples, the better…)

I encourage everyone to give it a try. Have a great deep learning life!👍

Here are some other interesting object detection projects I have created in the past.

Various uses of YOLOv5

The FarmL blog has a variety of YOLOv5 examples. Please take a look if you have time. (Sorry, Japanese only.)

- How to train YOLOv5 using original image data

- How to train YOLOv5 with transfer learning from pretrained weights

- Parameter optimization method for YOLOv5 using genetic algorithm

- How to crop objects detected by YOLOv5 and use them as training data

- Count the number of objects detected by YOLOv5 [Object Counter]
